As the massive Artificial Intelligence Act (AI Act) slowly makes its way through the EU legislative process[1], a new set of questions has arisen about the interaction between the AI Act and Free, Libre and Open Source Software (FLOSS) development practices. These questions focus on how the new liability, transparency and accountability requirements that the AI Act introduces to regulate the use of high-risk AI systems may discourage open source development of AI systems and, more specifically, of open source foundational (also referred to as general-purpose) AI models.

This discussion can be traced back to a post by Alex Engler (Brookings) titled “The EU’s attempt to regulate open-source AI is counterproductive,” which has subsequently been picked up by a number of other outlets and by organizations representing the interests of free and open source developers and companies.

These discussions coincide with a remarkable rise of foundational AI models being released under open source licenses[2] since this summer. The most prominent of these are BigScience’s BLOOM large language model and Stability.ai’s Stable Diffusion[3].

From the perspective of open source developers and their advocates, there are concerns that provisions in the AI Act (specifically the provisions on the responsibilities of AI system providers set out in Title III, Chapter 3 of the Act) may have chilling effects on the open source development of foundational AI models/systems. This is because it will be difficult and/or unattractive for distributed teams of developers to comply with these provisions in a meaningful way, and because projects would potentially face liabilities for downstream uses of their models over which they have no control.

This is problematic since the emergence of open-source AI models is a positive development: it brings more transparency to the field, lessens the dominance of a small number of well-resourced AI providers over the development and use of AI, and has the potential to empower smaller (European) players.

Against this background, open-source advocates and representatives of companies contributing to the development of open-source AI models are advocating for provisions that would remove Open Source AI systems/models from the scope of the AI Act (at least until they are used as part of high-risk applications) and/or exclude foundational AI systems from the scope of the Act.

At the same time, a broad coalition of civil society organizations has emphasized that, for the AI Act to provide meaningful protections against high-risk uses of AI systems, general-purpose AI (GPAI) systems must be included in the scope of the Act, and the obligations imposed on them must remain meaningful.

This raises the question of how far it is possible to protect open source development of AI systems/models from potential chilling effects that result from the AI Act, while being mindful of the danger of introducing loopholes that would exempt malicious actors from the requirements for the dissemination and use of high-risk AI systems. While this question is distinct from the question of whether GPAI systems should be included in the scope of the Act, there are substantial overlaps between the two that further complicate finding an approach for safeguarding this objective.

Three different approaches

We can currently distinguish three different proposals for dealing with open-source AI systems in the draft AI Act: the original Commission proposal, the general approach adopted by the Member States and the approach proposed in the opinion of the legal affairs committee (JURI) of the European Parliament.

The operative elements of these approaches are the obligations in Title III, Chapter 2 (Requirements for High-Risk AI systems) and Chapter 3 (Obligations of Providers and Users of High-Risk AI Systems and Other Parties), and how these obligations should be applied to providers of Open Source AI systems.

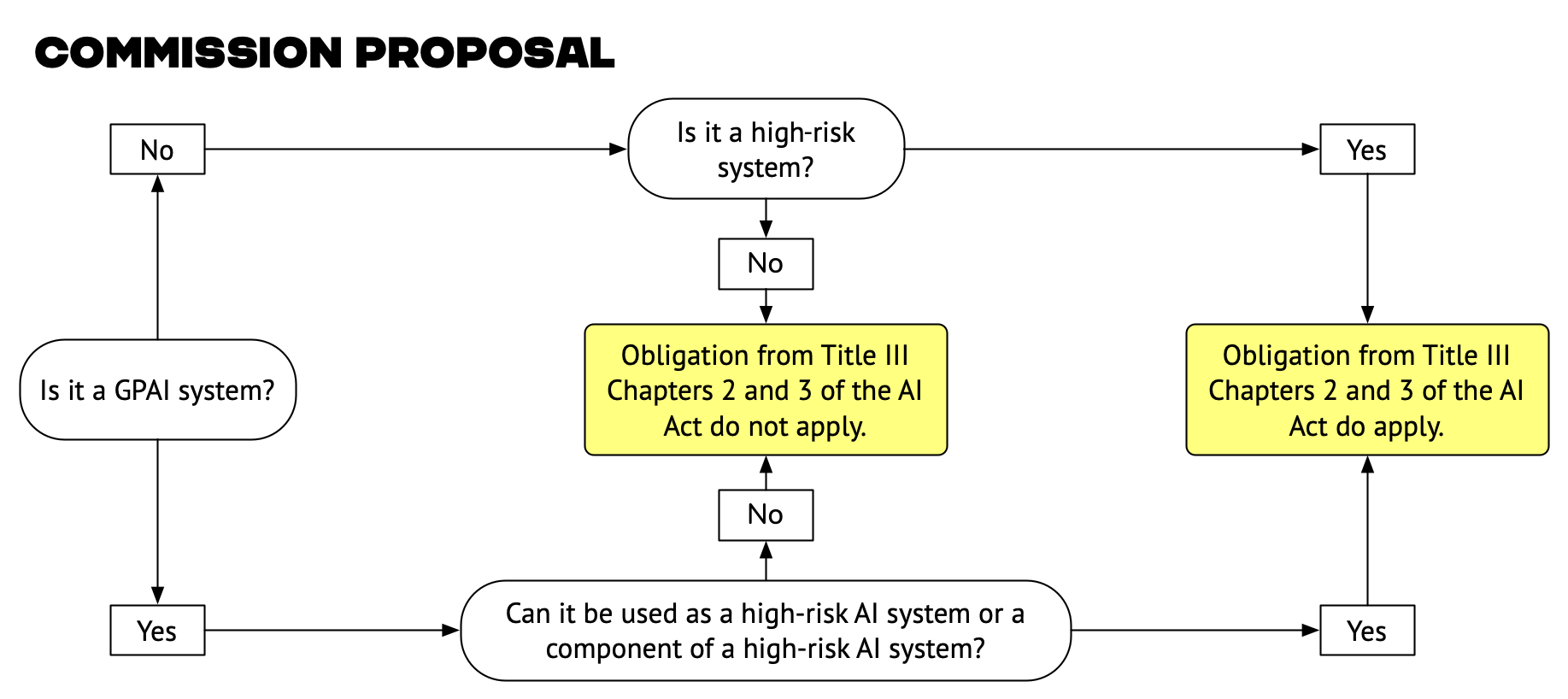

The original Commission proposal only applies the relevant regulatory requirements to High-Risk uses of AI, rather than regulating the availability of an AI system/model as such. As a result, AI systems capable of executing multiple tasks would be out of scope unless they can be used as (part of) high-risk systems. This approach is agnostic regarding the development methods (open or closed source).

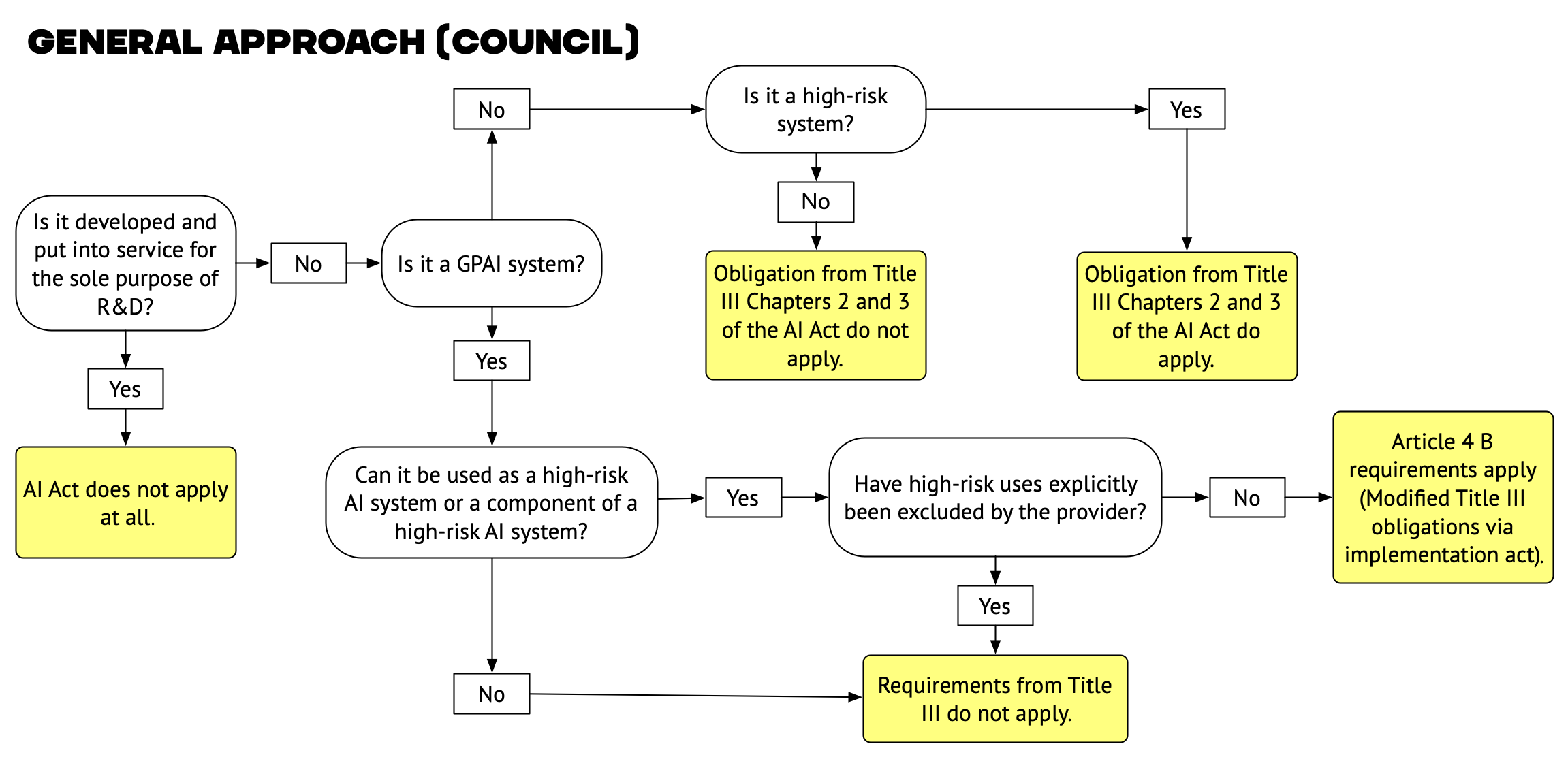

The Council’s general approach, adopted last month, takes a different strategy. It explicitly includes GPAI systems in the Act’s scope but contains a number of relatively wide exceptions. The requirements from Title III would apply to GPAI in modified form (based on implementing acts), unless the system in question is developed and put into service for the sole purpose of R&D, the system cannot be used as a high-risk system, the provider has explicitly excluded high-risk uses, or the provider is a micro, small or medium enterprise.

None of these conditions cover Open Source AI systems as such. It is possible that the condition to exclude high-risk uses could be fulfilled by Open Source AI systems made available under the so-called Open RAIL licenses. However, this scenario raises questions about the enforcement of, and the legal basis for, such restrictions[4].

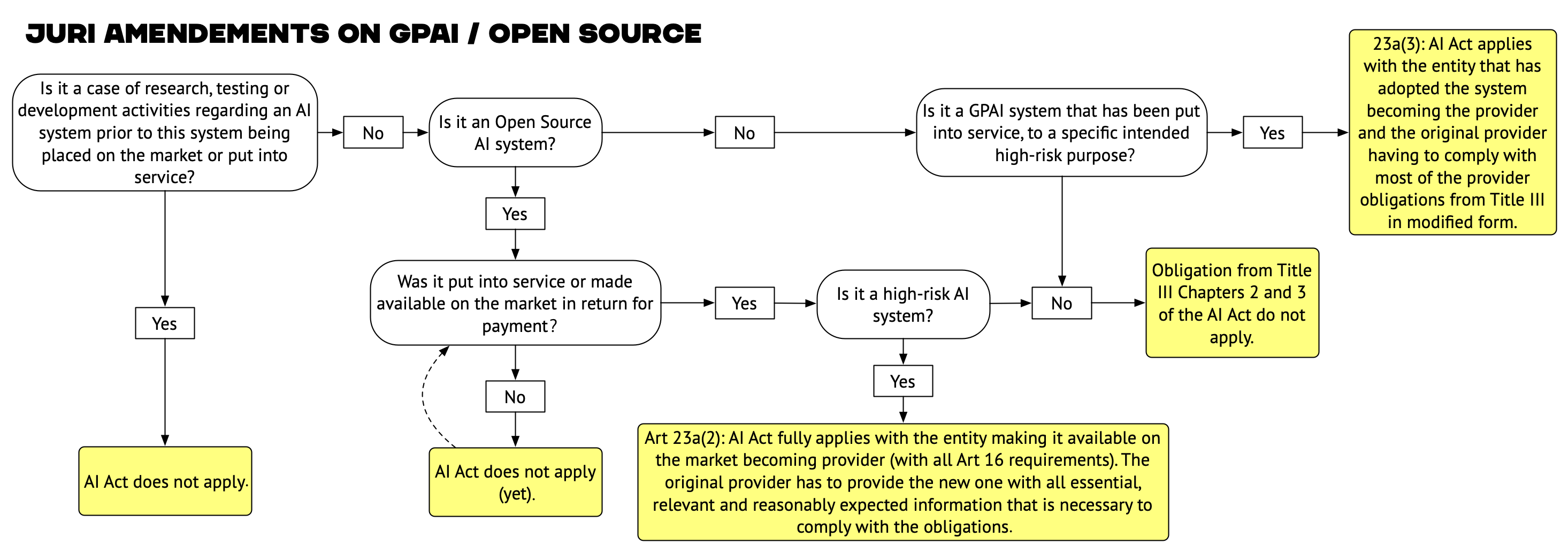

On the side of the European Parliament, the amendments contained in the JURI opinion from 12 September explicitly address both GPAI and Open Source. They would include GPAI in the scope of the Act but would exclude Open Source AI systems until the point where they are put into service or made available on the market “in return for payment, regardless of if that payment is for the AI system itself, the provision of the AI system as a service, or the provision of technical support for the AI system as a service.”

Once Open Source AI systems are put on the market for payment (by the entity developing them or another entity) or are used for high-risk purposes, the provisions from Title III apply. Yet, the burden of compliance is moved from the original provider to the entity that has put the system on the market, while the original provider would need to collaborate on some of the information provision obligations with the new one[5].

The way forward

None of the approaches discussed above satisfactorily addresses our concerns. Both the Commission’s proposal and the Council’s general approach ignore the concerns related to chilling effects on open-source AI development. While acknowledging the specificity of open-source AI systems, the approach discussed in JURI fails to convincingly balance the competing objectives of limiting chilling effects on open-source AI development and maintaining an adequate level of regulatory oversight for high-risk uses of such systems.

In a recent policy brief on the challenge posed by general-purpose AI systems for the AI Act, the Mozilla Foundation proposes that the Act…

… should take a proportionate approach that considers both the special nature of the open source ecosystem as well as the fact that open source GPAI is released with more information than its proprietary equivalent, along with, for example, better means to validate provided information and test the capabilities of GPAI models. GPAI released open source and not as a commercial service should therefore be excluded from the scope of the approach outlined above if the information necessary for compliance is made available to downstream actors. This could contribute to fostering a vibrant open source AI ecosystem, more downstream innovation, and important safety and security research on GPAI.

This approach, which could build on the explicit recognition of the special characteristics of open-source AI systems introduced in the JURI opinion, seems better suited to resolve the competing concerns identified above. While the authors of the Mozilla policy brief do not further specify what they consider to be “the information necessary for compliance,” it seems right that they propose to leverage the inherent transparency advantage of open-source software development. In the context of the AI Act, such transparency requirements should at least include:

- Standardized information about the model in the form of model cards.

- Standardized information about the data used to train, validate and (where appropriate) fine-tune the model in the form of data sheets.

- Access to the data that has been used for training and fine-tuning, at a minimum to the extent required for the purpose of auditing the model.

- Licensing conditions that guarantee the right of licensees and third parties to audit and explain the behaviors of models.

By providing this information alongside open-source AI models, the developers of these models would create the conditions for downstream users, who apply the models for uses classified by the AI Act as high-risk, to comply with the obligations based on information that the developers already have. Instead of facing potential difficulties in complying with obligations arising from downstream uses ex-post, developers would need to comply ex-ante with a clearly defined set of transparency obligations that benefit not only the downstream users but all other third parties that may have an interest in understanding such models. As argued by Mozilla, this approach would make an important contribution to assuring the viability of open-source development of AI technologies that provides an important alternative to proprietary development models.

By drawing inspiration from this approach, the European Parliament, which is expected to discuss the treatment of GPAI models later this week, still has the opportunity to address the concerns raised by the open-source community without jeopardizing the AI Act’s overall regulatory objective.

Footnotes

- [1] The Member States adopted their general approach on 25 November, while the EU Parliament is still working its way through the file.

- [2] While these licenses are not compatible with the Open Source Initiative’s definition of an open source license because they restrict a number of high-risk and/or ethically fraught uses of the licensed models, these licenses allow open-source-style collaborative development across institutions, meaning that the models are published in the open and that they can be reused, modified and deployed. (The RAIL licenses are derivatives of Apache licenses.)

- [3] See also my colleague Alek Tarkowski’s Notes on BLOOM, RAIL and openness of AI from September of this year.

- [4] There is currently no legal certainty that copyright-based licenses (such as the open RAIL licenses) create legally binding restrictions when applied to AI models.

- [5] There have also been discussions about language that would entirely remove the duty of providers of open source AI systems to collaborate with downstream providers of the high-risk AI system based on them.