Supporting Open Source and Open Science in the EU AI Act

Today, together with Hugging Face, Eleuther.ai, LAION, GitHub, and Creative Commons, we are publishing a position paper on Supporting Open Source and Open Science in the EU AI Act. The paper was prompted by the European Parliament’s report on the AI Act and, specifically, by its inclusion of rules that provide safeguards for the use of so-called foundation models (see our previous analysis of these rules). These rules will form the basis for discussion in the trilogue negotiations in the fall of this year. In this statement, intended as input for those negotiations, we propose an approach that balances the need for safeguards with the value of open source development of AI systems.

While the rules for foundation models (including generative ML models) are very welcome, the organizations that drafted the statement share a concern that these rules, as currently envisioned, will restrict open source development of AI/ML systems. As a result, they would put open source developers at a structural disadvantage compared to large technology companies, with their largely closed approaches to development.

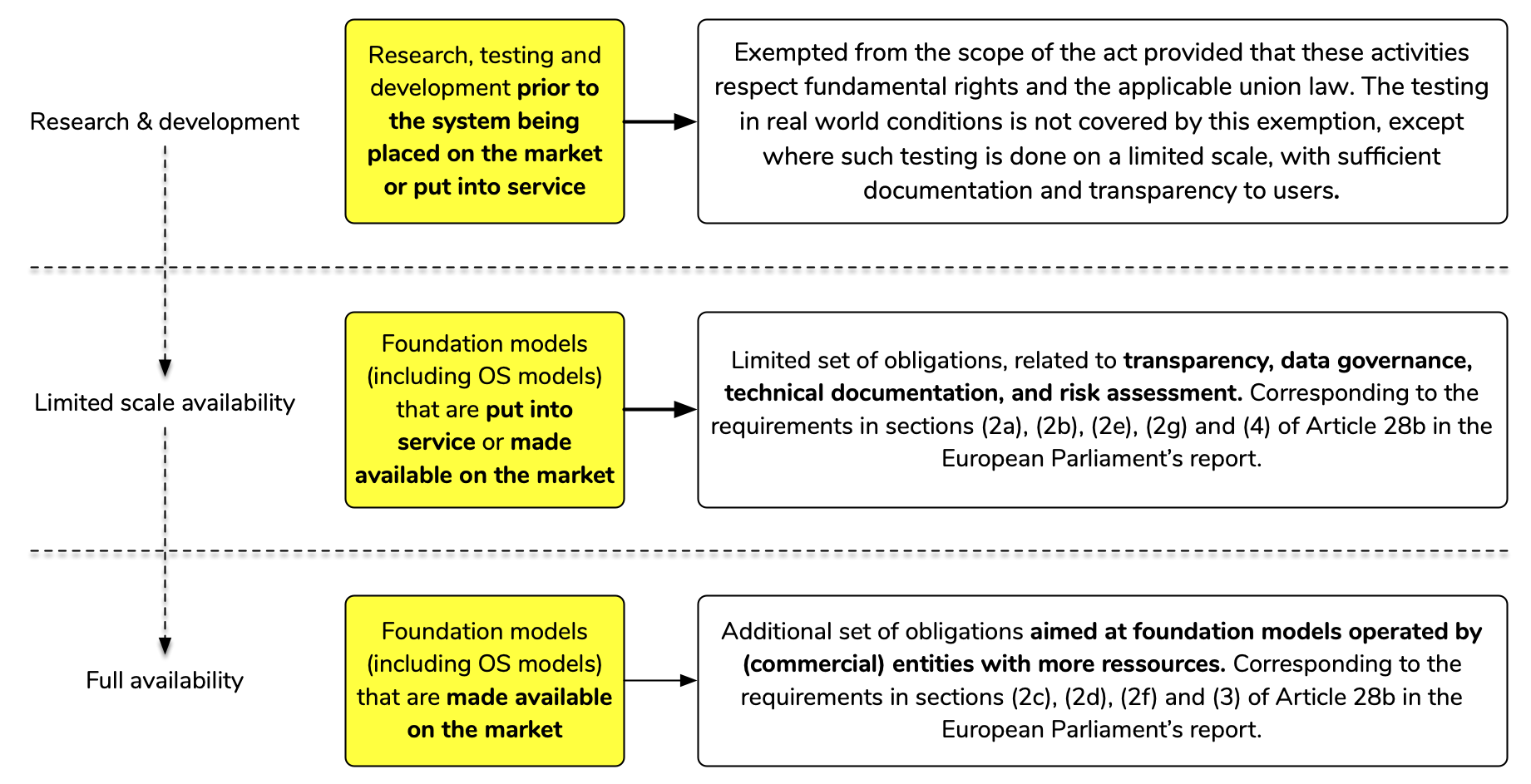

In the position paper, we propose a proportionate approach to regulating foundation models that builds on the Parliament’s proposal but makes certain requirements conditional on the commercial use of such models. While the paper also calls for expanding the research exemption to allow limited real-world testing, it explicitly does not propose a full-fledged exemption for open source foundation models.

As part of the paper, we provide a comprehensive overview of the open ecosystem of AI development, highlighting the importance of open development practices both for democratic control and for open, transparent scientific research in this rapidly evolving field. We argue that broad access to functional AI systems and open general-purpose models is both a necessary and a valuable part of open and accountable AI development. Openness is, therefore, an essential element in ensuring that European values are upheld in this area of technological development.

Proportionate requirements for foundation models

Our proposal for proportionate requirements for foundation models recognizes that there is much overlap between the values behind the AI Act and existing open source AI development practices. For example, a commitment to transparency and documentation lies at the core of the open source development approach. On the other hand, some of the requirements for foundation models introduced in the European Parliament’s report seem to assume that only well-resourced commercial entities develop foundation models. As we point out in the paper, this is not the case. As a result, some of these requirements, such as the obligation to implement quality management systems or to keep technical documentation available for a period of 10 years, will act as a deterrent to open source development of foundation models, thereby cementing the dominance of large technology companies in this area.

Therefore, our paper proposes applying a set of baseline requirements that ensure meaningful transparency, data governance, technical documentation, and risk assessment to all foundation models that are “put into service” or “made available on the market”. The remaining requirements contained in the proposed Article 28b of the EP report should apply only to foundation models that are “made available on the market”, which in the terminology of the AI Act means that they are supplied in the course of a commercial activity. As a result, the requirements that demand greater institutional capacity will apply only when such models are deployed on a commercial scale.

We strongly believe that open source and open science are the building blocks of trustworthy AI and should be promoted in the EU. We are confident that, with the proposed changes to the rules for foundation models, the AI Act can safeguard the existing open ecosystem of AI development.