Seeing like an algorithm: A closer look at LAION 5B

Researchers at Knowing Machines have published Models all the way down, a visual investigation that takes a detailed look at the construction of the LAION 5B dataset “to better understand its contents, implications, and entanglements.” The investigation offers insight into the internal structure of, and the strategies used to build, one of the largest and most influential datasets used to train the current crop of image generation models. Among other things, the researchers show that the dataset’s curators relied heavily on algorithmic selection to assemble it, and as a result…

…there is a circularity inherent to the authoring of AI training sets. […] Because they need to be so large, their construction necessarily involves the use of other models, which themselves were trained on algorithmically curated training sets. […] There are models on top of models, and training sets on top of training sets. Omissions and biases and blind spots from these stacked-up models and training sets shape all of the resulting new models and new training sets.

One of the key takeaways from the researchers (who, for all their critical observations, give LAION credit for releasing the dataset as open data) is that we need more dataset transparency to understand the structural configuration of today’s generative AI systems. This is very much in line with what we have been advocating for in the context of the AI Act, and we will continue to push for it in the implementation of the Act.

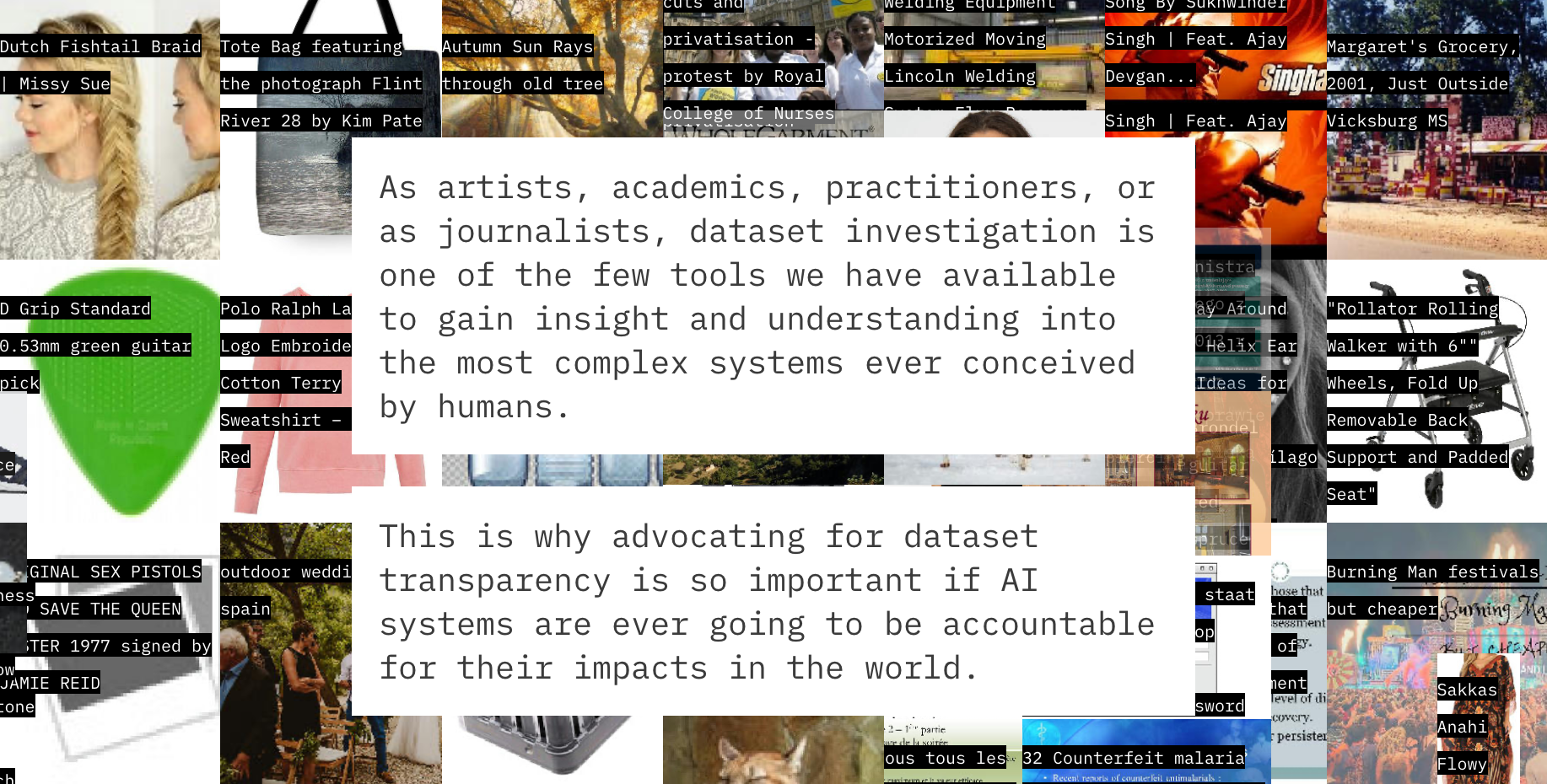

Screenshot from Models all the way down, © Knowing Machines