In this article, our 2023 fellow, Nadia Nadesan, maps AI governance practices from different cities and examines what it means when technologies encounter the local social, cultural, and political context. You can read more about Nadia and learn about our fellowship program here.

The world of AI has the appearance of boundless momentum, especially since OpenAI's release of GPT-4. An endless slew of AI tools has appeared, doing everything from writing emails to planning vacations. The speed of AI seems to transcend borders and slip through bureaucratic policy processes. The EU Artificial Intelligence Act, proposed in 2021, has been sent back to the drawing board now that chatbots can draft speeches, profess love, and even lie when doing so helps them complete a task. With EU rule-makers restrategizing once again, the question arises: what are the possibilities for AI governance? And who will get to participate?

Friction and AI Governance

AI governance covers a wide range of processes and conversations, from companies' internal governance policies to public, national, and transnational regulatory bodies. For example, AI governance can refer to nation-states that set global precedents, such as the United States and the EU member states, or to multilateral spaces, such as the Internet Governance Forum (IGF), which gives public, civil society, and private stakeholders a space to discuss the direction of AI-related policies. There is therefore a certain ambiguity about what AI governance refers to. In this blog series, I intend to map the places where friction occurs in this seemingly effortless and inevitable flow of technology.

To explain what I mean by friction, I draw on Anna Tsing's work on globalization, where she defines it as the "resistance…to the transnational flow of goods, ideas, people, and money". Tsing uses friction to describe how global economies stumble and bungle their way through local cultural contexts and bureaucracies. Today's conversations and narratives about the possibilities of data and AI are reminiscent of the 1990s excitement about globalization's limitless possibilities. However, these narratives often obscure the moments and spaces where those possibilities slow down as they reach the local, situated, and concrete realities of a physical place and its people.

It is in these sticky, friction-filled moments, where bureaucracy, civil society networks, and everyday people weigh in and shape the trajectory of policies, people, goods, and ideas, that governance processes, and their oversights, come to light.

Observing Governance from the Scale of the City

In a first attempt to map the sticky friction of AI governance, I use cities to highlight stakeholders at local, national, and transnational scales, along with the governance practices used to implement, regulate, and monitor AI as public city infrastructure. Situating governance in the city lets me observe the local, more concrete systems and parameters through which power is distributed to decide collectively on regulations and priorities, set to ensure transparency, accountability, and the maintenance of the city. When AI is framed as part of the city's infrastructure, there are established norms and historical, collective expectations about ownership. Within this purview, the role of AI governance in implementation and maintenance is to ensure that AI is used ethically and responsibly and that there is access to accountability. Using AI to support public infrastructure should, in theory, make AI part of a public good, with mechanisms and practices that public and civic stakeholders can access to shape it. I expect to see how AI is implemented, regulated, and monitored, or where there are lapses that city governments must catch up on, in ways that are obscured when looking at the transnational level of governance alone.

To make this case, I want to refer to the Radical AI podcast episode 'Transparency as a Political Choice,' in which the guest, Mona Sloane, asks the audience to imagine roads and highways. In this imaginary, Tesla has built the streets and highways, so residents must petition Tesla about any issues, such as repairs. Sloane posits that most people would be horrified by such a situation: if a road is built for public use, one expects a public body to "ensure equitable use, care, and accountability." However, this norm of entrusting public entities with building and maintaining public goods does not translate easily to digital infrastructure, which is often seemingly invisible and can therefore bypass these public expectations. AI already forms a hidden part of the city's infrastructure, and because it goes unseen, it is harder to discuss and understand in tangible terms.

The Possibility of a Place

With this example of streets and roads, I want to highlight that local, concrete contexts give me and others expectations, historical references, and ideas about what digital public infrastructure should look like and how it should be managed. Working at smaller scales helps explain and extrapolate abstract visions of what the commons should look like by drawing on historical memory and practices around open pastures and parks.

While cities might appear too local for the grandiose scale at which AI companies operate, cities and other physical places such as towns or villages can connect transnationally in a way that regions or provinces only sometimes do. They can even take a stand against national, federal, and state policies, as sanctuary cities have done. Besides this ability to work both locally and transnationally, cities offer concrete places to address accountability issues and to develop bottom-up strategies through which actors beyond public, state, and private corporate entities can exercise agency in the governance process. Although online demands for accountability are often key to gaining traction, they remain vulnerable to the privatized platforms on which they take place. Physical places and their politics play a role in facilitating, or creating friction in, governance processes.

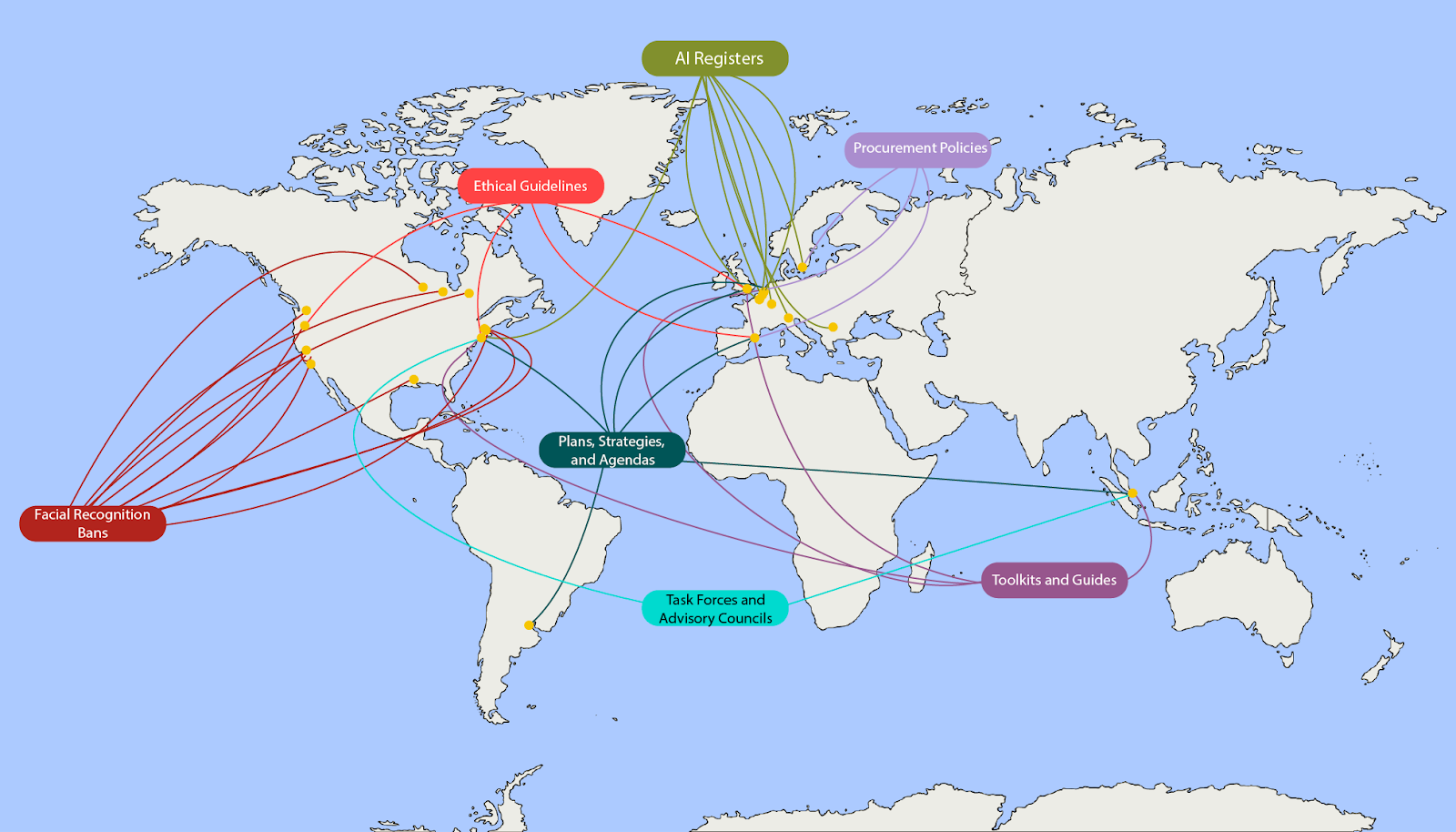

Mapping AI Governance Practices

To track AI governance, I started by looking at city initiatives such as Cities for Digital Rights and then expanded my search from academic references to communications directed at civil society. Framing governance through the city was very useful for limiting my scope, given the plethora of material on AI governance. To narrow it down further, I chose to include only examples that appeared more than once, to see whether any interesting patterns or typologies arose. For example, this mapping does not cover one-off policies such as 'Barnets Bästa' in Lund, a policy for child-centered AI. Nor does it catalog uses of AI; it focuses on the governance processes that oversee AI systems in the city.

My primary sources for finding these initiatives were:

To frame city governance practices, it may help to think of governance as a living, mutable process. A process has a life cycle: a beginning, a middle, and an end, or else iteration and a new beginning. There are many ways to name and identify the phases. Still, these life cycles indicate the maturity, power, and experience of the systems and of the people implementing them. This is an idea I want to keep in mind while mapping. Do we reach a point where these policies are iterated in ways that are transparent and clear? Ethical guidelines are great, but how do we fulfill them through maintenance, monitoring, evaluation, and consultation?

Broadly, the most prevalent practices that I identified and mapped as part of AI governance in the city were the following:

- Procurement policies – An AI procurement policy for a city is a set of guidelines and regulations that dictate how public organizations in the city should procure and deploy AI systems. Such policies are designed to ensure that AI systems used by the public sector are transparent, fair, and ethical.

- Ethical Guidelines – AI ethical guidelines are principles and recommendations to ensure that AI is developed and used responsibly and ethically. These guidelines aim to ensure that the technology is developed and used transparently and accountably, and that it respects fundamental human rights.

- Plans, Agendas, and Strategies – E-government strategies and plans range from planning automated traffic infrastructure in the city, bringing the idea of the smart city to life, to building on previous experience with AI to create new systems.

- AI Registers – A city AI register is a database containing information about the artificial intelligence systems being used or deployed within a particular city. The register can include details such as the type of AI system, its intended purpose, the data it uses and collects, the algorithms it employs, and the individuals or organizations responsible for its development and deployment. Many cities have implemented AI registers to increase transparency about how AI is used within the city.

- Task Forces and Advisory Councils – These refer to bodies that advise and monitor the use of AI and its impact on the city.

- Facial Recognition Bans – A city facial recognition ban is a policy or regulation that prohibits or restricts the use of facial recognition technology within the boundaries of a particular city. Facial recognition bans are related to AI because facial recognition technology is a form of artificial intelligence that uses machine learning algorithms to identify individuals based on their facial features.

- AI Czar – "Czar" is a curious title, given that it derives from "Caesar", the title of Roman emperors. Nevertheless, the Biden administration and New York City created and appointed AI czars to serve as experts responsible for overseeing and coordinating the development and implementation of artificial intelligence policies and strategies.
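To make the AI register idea more tangible, here is a minimal sketch of what a register entry might record, assuming the fields listed in the AI Registers item above (type of system, intended purpose, data used and collected, algorithms, and responsible parties). The class, field, and method names (`RegisterEntry`, `AIRegister`, `by_party`, `using_data`) are hypothetical, and the example system is invented for illustration; real city registers define their own schemas.

```python
from dataclasses import dataclass, field


# Hypothetical schema: field names are illustrative assumptions mirroring the
# details a register "can include", not the format of any real city register.
@dataclass
class RegisterEntry:
    name: str
    system_type: str                # e.g. "computer vision", "chatbot"
    intended_purpose: str
    data_used: list[str]            # data the system uses and collects
    algorithms: list[str]           # algorithms or model families employed
    responsible_parties: list[str]  # developers, vendors, deploying departments


@dataclass
class AIRegister:
    entries: list[RegisterEntry] = field(default_factory=list)

    def add(self, entry: RegisterEntry) -> None:
        self.entries.append(entry)

    def by_party(self, party: str) -> list[RegisterEntry]:
        """Return every system that a given organization is responsible for."""
        return [e for e in self.entries if party in e.responsible_parties]

    def using_data(self, data_kind: str) -> list[RegisterEntry]:
        """Return every system that uses or collects a given kind of data."""
        return [e for e in self.entries if data_kind in e.data_used]


# Usage with an invented example system (hypothetical, for illustration only).
register = AIRegister()
register.add(RegisterEntry(
    name="Parking scan car",
    system_type="computer vision",
    intended_purpose="detect unpaid parking",
    data_used=["licence plates", "location"],
    algorithms=["object detection"],
    responsible_parties=["city parking department", "external vendor"],
))
vendor_systems = register.by_party("external vendor")
```

Structuring entries this way turns simple accountability questions, such as which systems a given vendor is responsible for or which systems collect location data, into lookups; whether a real register supports such queries depends on what it publishes and how.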

What is missing? Unaddressed governance practices

There is currently little conversation about the fact that many governance practices do not directly address AI projects that started almost a decade ago. Over the past decade, there have been many oversights, instances in which AI was implemented, sometimes without the city government's full awareness. One example is New Orleans, where Palantir, a data mining company started with seed money from the CIA, partnered with the New Orleans Police Department on a predictive policing initiative. City council members were initially unaware that the program existed because it did not go through a public procurement procedure and was instead framed as pro bono philanthropic work. Similar initiatives, shrouded in secrecy or framed as philanthropy, have appeared in Los Angeles and New York. City governments must catch up with the existence of these technologies and also grapple with the significant impact they have already had on public infrastructure. However, this narrative is lost in projects such as the Atlas of Urban AI, which does not yet address or include initiatives and failures around surveillance and predictive policing. Conversations about AI and its implementation in city infrastructure have been going on for over a decade. The implicit narrative of the Atlas and of AI registers paints an all-too-benign picture of linear progress that does not adequately inform the public of the harms and costs, both environmental and human, or of the choices available to opt out of AI systems that were often created, and are now maintained, by private corporations.

AI, especially in contexts lacking transparency and access to accountability, poses enormous risks to democratic societies and governments.

These risks include amplifying inequality and discrimination through the biases in these systems, creating more mechanisms for disinformation, and reinforcing concentrations of power and capital between ravenous tech companies and governments. Through this blog series, I want to address how governance can anticipate AI's future and how systems might create access to accountability where governance oversights have occurred. By starting with cities, i.e., concrete places and people, we can have more meaningful discussions about technologies.