Friction in AI Governance: there’s more to it than breaking servers

In her last post, Friction and AI Governance: Experience from the Ground, Nadia shared learnings from facilitating a citizen assembly concerning the AI Act. In this article, she examines collective bargaining as an essential element of AI governance. You can read more about Nadia and learn about our fellowship program here.

The issue with informed consent and the perceived lack of agency

In the sixth season of Black Mirror, a British speculative-fiction TV series, one of the episodes depicts actors Salma Hayek and Annie Murphy breaking into a streaming corporation, Streamberry, to destroy a server and reclaim their likeness, data, and lives. They do so after their respective lawyers explain that the agreements they signed with Streamberry are unbreakable and that any complaint or accusation against the company would fall apart in court. Annie signed away her rights by clicking ‘agree’ to the terms and conditions when signing up for the platform. While explaining the lack of legal recourse, Annie’s lawyer presents her with the terms and conditions in the form of a menacing stack of paper, saying: “You’ve probably never seen it printed out before.”

The image of the contract in the form of a thick, impenetrable paper tower speaks to the obscure and opaque nature of consent in the context of technology. Giving consent is often experienced as tedious, irritating, and, at worst, mentally fatiguing, so clicking “agree” or “allow” becomes vastly easier than navigating any alternative. The scene highlights the resignation and powerlessness that individuals face when interacting with technology. It also signals how difficult it is to opt out.

The episode illustrates fears and anxieties around the consequences that might result from a lack of awareness of our data and of the bargaining power people have in the face of big tech. Annie and Salma’s visits to their lawyers realistically depict legal processes and frameworks such as GDPR, procurement, or licensing as sites of friction where individuals, organizations, civil society, companies, and public entities encounter each other. These sites are the cornerstone of structuring who develops and implements technology such as AI, as well as what is built, when, and how. However, these sites are inaccessible because of the resources and legal expertise needed to make an impact or a case, shutting out the possibility of wider participation. So what could more meaningful engagement in AI governance look like, aside from breaking in and destroying servers?

The argument for collective bargaining

The 2023 Writers Guild of America strike was an excellent example of successful collective action against unfair working conditions and against the exploitative extraction and extrapolation of creative content and actors’ likenesses with AI. The results of the strike include a new contract that establishes bonuses for writers based on viewership data on streaming services, sets minimum staffing requirements in TV writers’ rooms depending on the length of the season, and imposes limits on the use of artificial intelligence. This will not be the last time technology and AI threaten working conditions and fundamental rights. However, the success of the strike points to the importance of unions, or of other spaces and places, for building a critical mass around collective participation and action.

Where and how was this participation and action built? Despite the global reach of Hollywood, writers and actors were striking in person in Los Angeles, with writers picketing outside major studios. While media and capital flows seem impervious to being stuck in one place, striking in LA made a difference to the velocity of the production of the films and series we all see on our screens. Strikers out with their signs, protesting their working conditions for nearly five months, caused the biggest disruption to the industry since the COVID-19 pandemic.

Reimagining Governance Possibilities and Interventions

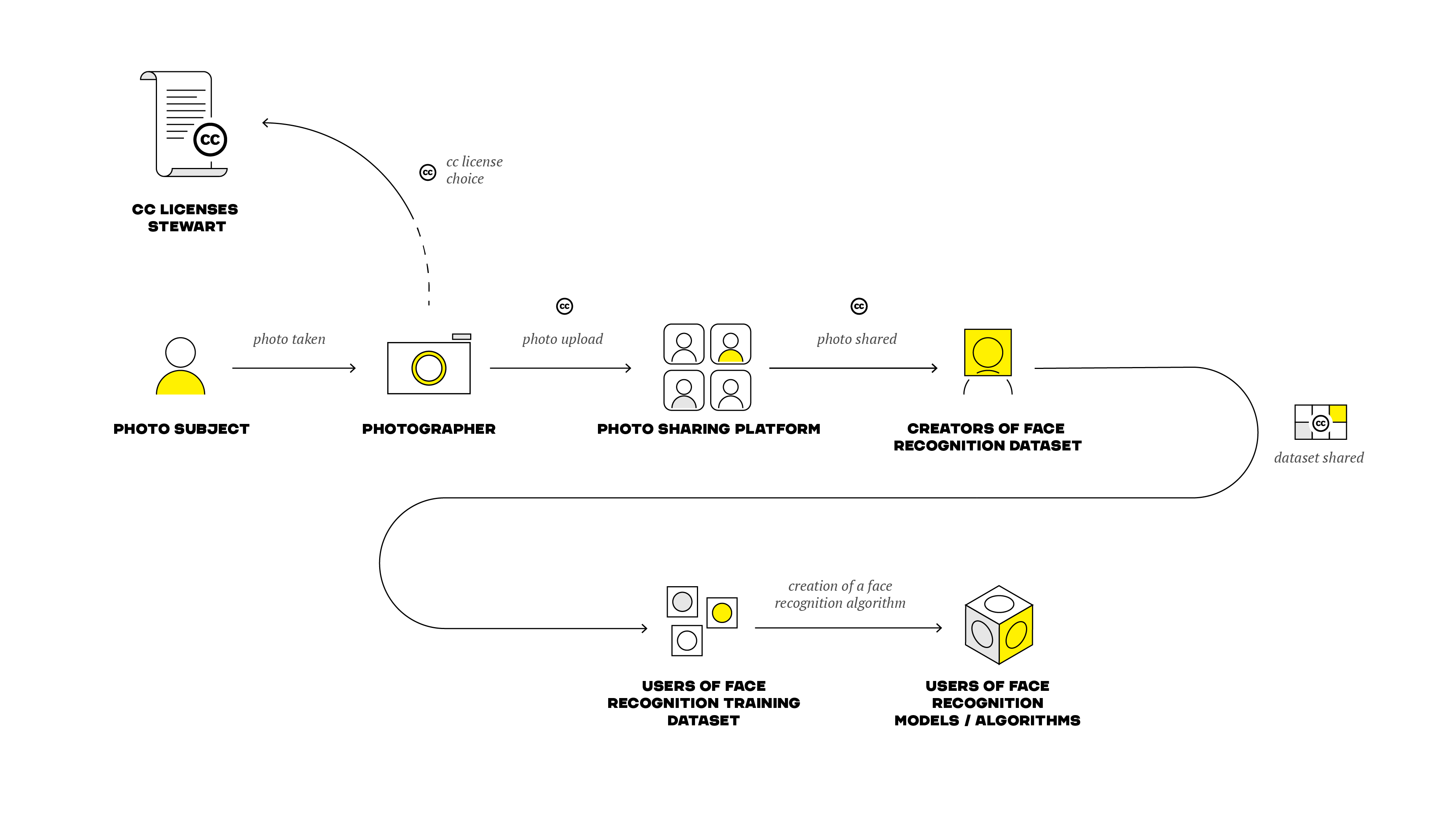

Salma Hayek and Annie Murphy’s characters raise the question of how we can safeguard our individual image, our rights, and our lives in the age of AI. As the AI Commons report by Open Future stated, the search for accountability frameworks and legal solutions to the challenge of reclaiming or negotiating the use of one’s image is still ongoing. This search is especially important when images from platforms that store digital commons, including Flickr and Wikimedia, are used to develop and train AI models and systems. With the rise of generative models, creators are concerned that machines can now consume their works, reassemble them, and spit out synthetic content that closely resembles outputs previously produced only by humans. Below is the diagram from the AI Commons report, illustrating how licensing could serve as a means of protection against misuse of an image.

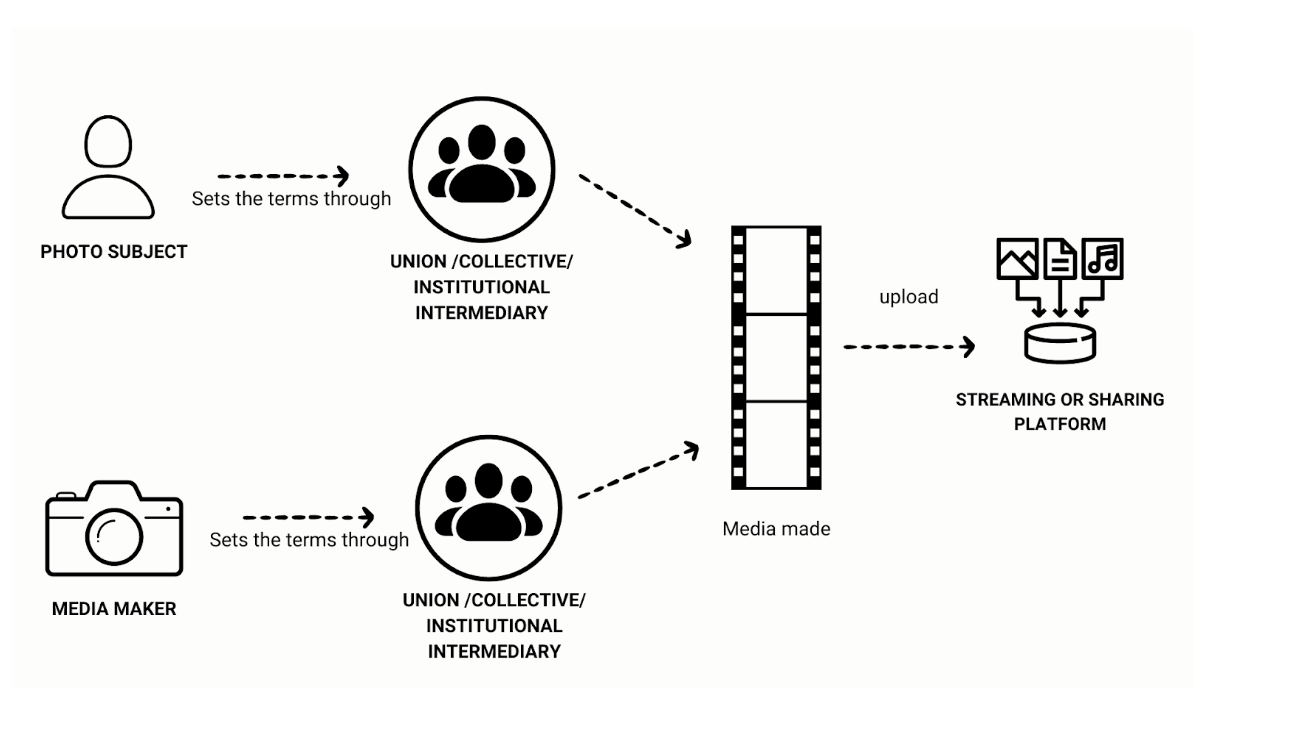

However, the recent Writers Guild of America strike demonstrated that there are other spaces in which to bargain collectively over the use of a person’s image and likeness. New formulations of collective bargaining could instead look like the following, if they were to include people mobilizing via unions or other local and regional spaces.

The diagram gives a broad idea of how collective bargaining could look, with intermediaries supporting and facilitating conversations around consent and data with larger corporations, platforms, and digital services. One European example of such organizing, which unfortunately failed, was Datavakbond in the Netherlands, whose name translates to “Data Labor Union”. With more initiatives like this, perhaps we will see a future where people look to physical places and to other people to demand change, since AI systems have thus far brought on some egregious examples of oversight and poor implementation of both private and public goods and services.

Conclusion

In the words of Michael Running Wolf, the founder of Indigenous in AI: “If you go into a community with a mindset that data is just a resource, a monetary valuable thing, you’re fundamentally harming the community, and you’re also diminishing the value of this data.” When corporations abstract people and their data into numbers and profit, we see the violent and vicious rhetoric of waiting for Hollywood strikers to go hungry and homeless.

As more extractive and unethical business models shape AI development, the need for spaces for collective bargaining and negotiation becomes ever more clear and present.

How data is extracted and reconfigured to inform our movies, mapping services, and hiring processes impacts more and more facets of everyday life. Data is more than just a plot point or number. It is an essential part of a product cycle that has material consequences for our quality of life. Going forward, AI governance must occur in different physical places, from civic spaces and workplaces to neighborhoods where labor is performed, encompassing the diverse contexts in which AI impacts our lives.