Blog

Common Pile and Institutional Books datasets chart two pathways for AI Commons

The newly released Common Pile by EleutherAI and the Harvard Institutional Data Initiative's Institutional Books 1.0 demonstrate that public domain and openly licensed works can power robust AI development while addressing legal concerns.

Joint Statement on Building a Dynamic, Resilient and Sovereign Technology Ecosystem

We have signed this joint statement urging EU leaders to invest in open, sovereign digital infrastructure and reduce platform dependence.

Europe Talks Digital Sovereignty

The EU takes steps toward digital sovereignty with new policies, but success requires substantial investment in public digital infrastructure.

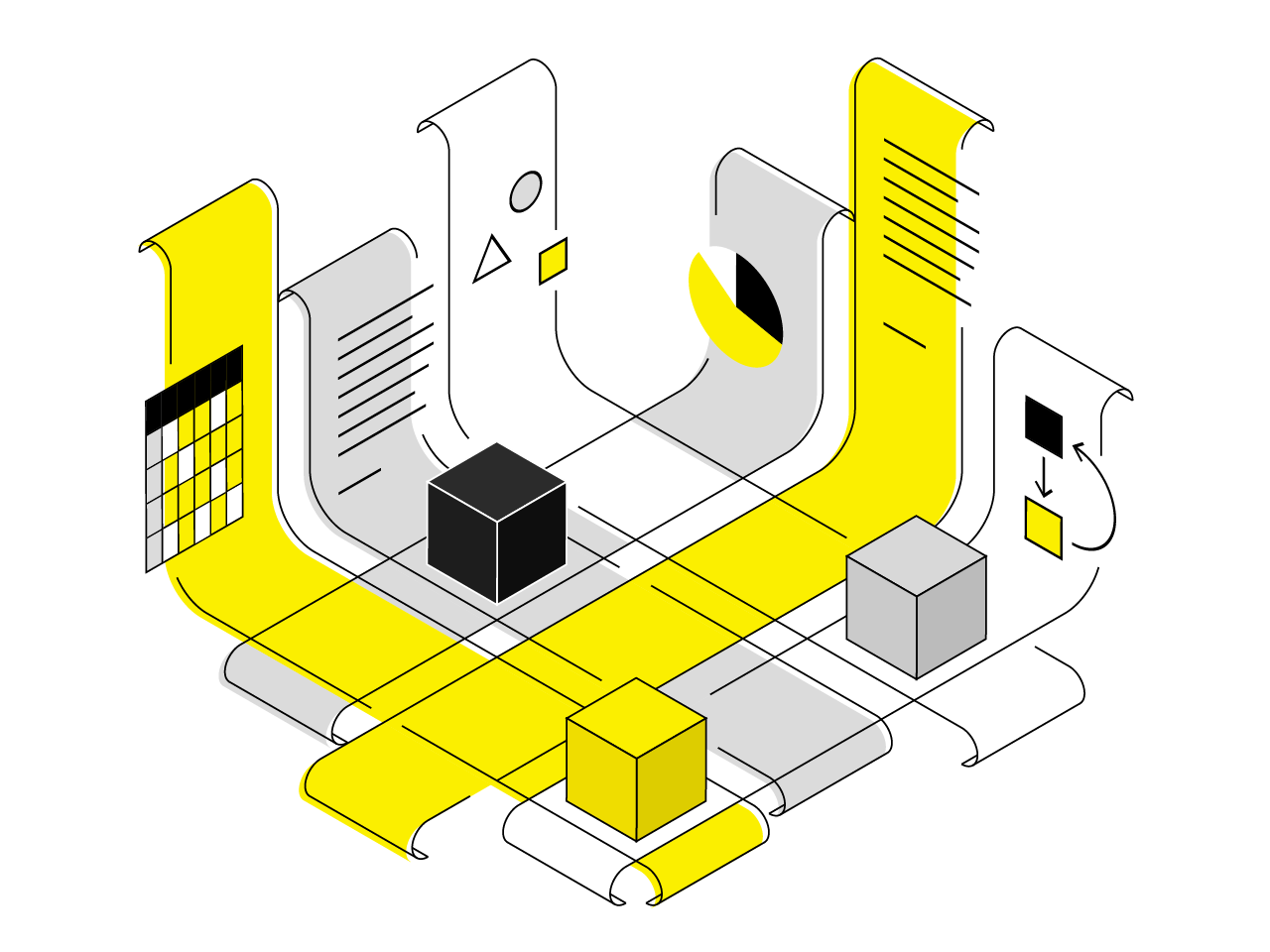

Leveraging Public Spending for Digital Sovereignty

The EU is rethinking how public procurement can drive digital sovereignty. Still, its market-focused approach falls short of what is needed to build public digital infrastructure that serves the public interest.

IETF working group will further develop our proposal for an opt-out vocabulary

The IETF AI Preferences Working Group has adopted Open Future's opt-out vocabulary proposal as its starting point for developing international standards for expressing AI preferences on the open internet.

Gutting AI transparency in the name of deregulation will not help Europe

The EU's rush to simplify AI regulation risks weakening transparency rules. This regulatory shift threatens core principles needed for responsible AI development.

From AI Factories to Public Value

The AI Continent Action Plan needs a stronger vision of purposeful AI deployment if it is to achieve more than just boosting commercial AI development.

Meet Aditya Singh, our new Senior Policy Analyst

As Senior Policy Analyst at Open Future, Aditya brings nearly a decade of experience in digital rights, data governance, and technology ethics. He will lead our activities in the NGI Commons project.

Is Web Scraping the Only Copyright Concern for AI?

The EU's AI Code of Practice has a blind spot: it limits copyright compliance requirements to web crawling. This narrow focus ignores other data collection methods, such as torrenting, potentially creating loopholes in AI training data regulations.

Looking for an Exit: Europe’s Way to Public Digital Infrastructures

The EU must fund and govern digital commons as part of long-term public infrastructure to escape a cycle of dependency on US or Chinese tech firms.

Deregulation disguised as Sovereignty

A draft ITRE committee report on technological sovereignty largely echoes the EU’s industrial agenda, but also fuels a deregulation push. Europe needs public digital infrastructures that serve citizens, not just business interests.