On 29 November 2021, the UK Information Commissioner’s Office (ICO) announced its provisional intent to impose a potential fine of just over £17 million on Clearview AI. Clearview AI Inc is an American facial recognition company that claims to have built “the largest known database of 3+ billion facial images” and describes itself as the ‘World’s Largest Facial Network’; the New York Times has covered the whole story. Clearview AI Inc relies on the vast collection of images from publicly available online sources to create datasets for machine learning, which are then sold to private and public actors worldwide to train their facial recognition systems. Following complaints brought by Privacy International and other civil society organizations, the ICO found this practice incompatible with UK data protection law.

A similar fate awaits the company in the European Union. On comparable legal grounds, the French privacy watchdog, the CNIL, recently ordered Clearview AI to immediately stop collecting and using the data of people in France and to delete the data it already holds. Clearview AI has two months to comply with the order; otherwise, sanctions may be imposed.

These recent developments highlight the sensitive nature of publicly available information in the age of Artificial Intelligence, including when images are openly licensed. Open Future is studying this tension as part of AI_Commons, an ongoing research and policy design process launched in collaboration with Exposing.ai by Adam Harvey. The project investigates the 2019 IBM-Flickr case, in which permissive Creative Commons licenses allowed the surveillance industry worldwide to collect photos of human faces. Users were unaware that their uploaded images were being used for such purposes, because they had never consented to this when uploading them.

The main objective of AI_Commons is to better define how the governance of shared resources can balance open sharing with the protection of personal data and privacy. One possible solution comes from a design-led perspective.

Aniek Kempeneers, a recent double MSc graduate from Delft University of Technology, has been contributing to AI_Commons over the past months. As part of her graduation project under DCODE, Aniek investigated how design concepts for consent practices can add a layer of protection for users’ privacy online. A dedicated AI_Commons session was hosted at MozFest 2022 to explore how well such design-led solutions scale to similar use cases.

Consent practices and disclosure interactions in the context of digital platforms

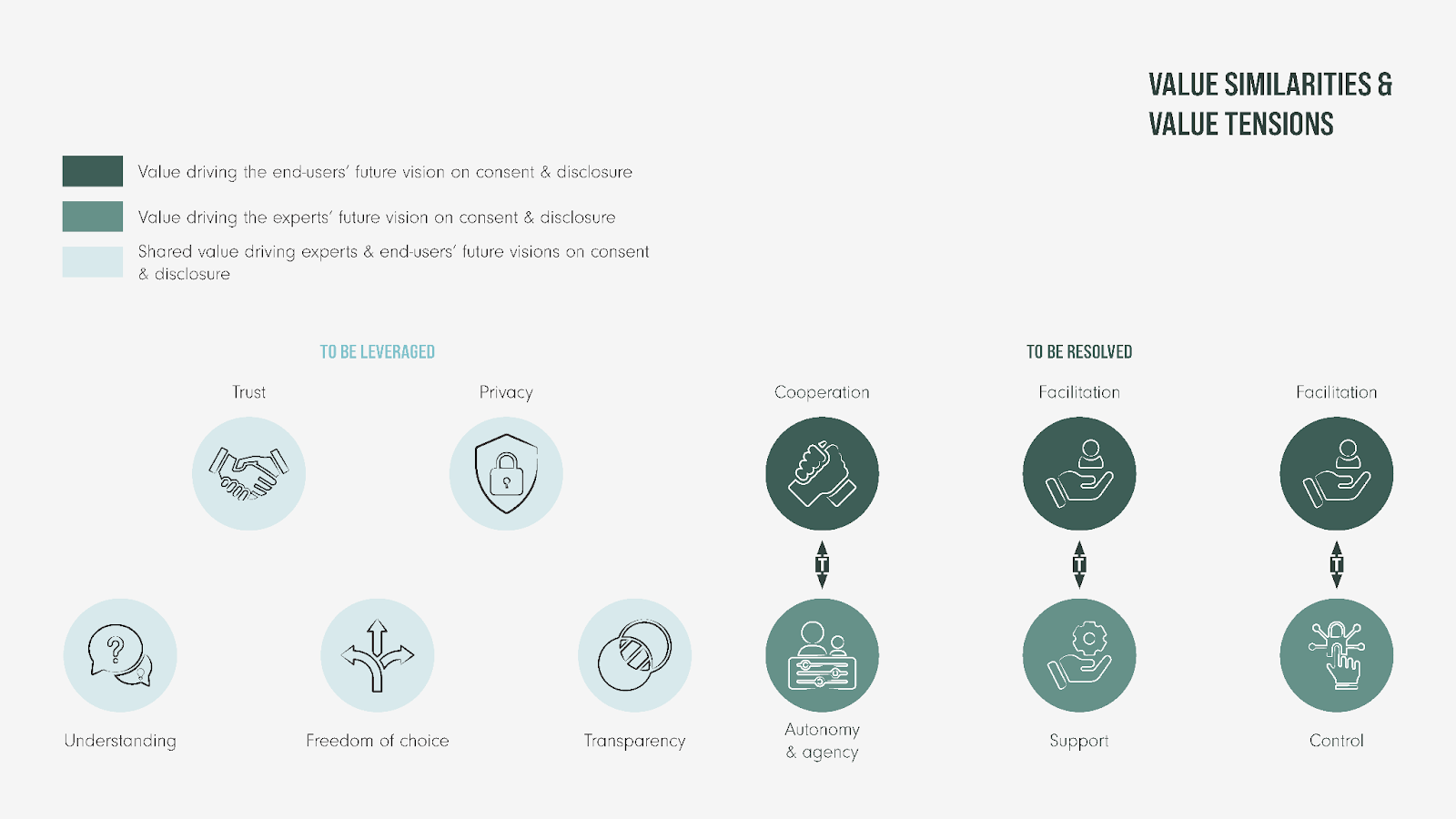

Aniek’s thesis investigated how consent practices and disclosure interactions can be redesigned to build the future data practices and digital platform relations that both digital platform organizations and end-users desire. Experts’ and end-users’ future visions of digital platform relations, data practices, consent practices, and disclosure interactions were examined and compared to identify commonalities (i.e., value similarities). Such commonalities can provide a foundation for exploring solutions. The comparison also surfaced fundamental tensions (i.e., value tensions) that need to be resolved to create the conditions in which new practices can be effective and meaningful.

The thesis proposed and demonstrated that consent practices and disclosure interactions in the context of digital platforms can be successfully redesigned by leveraging the identified value similarities and resolving the identified value tensions (Figure 1).

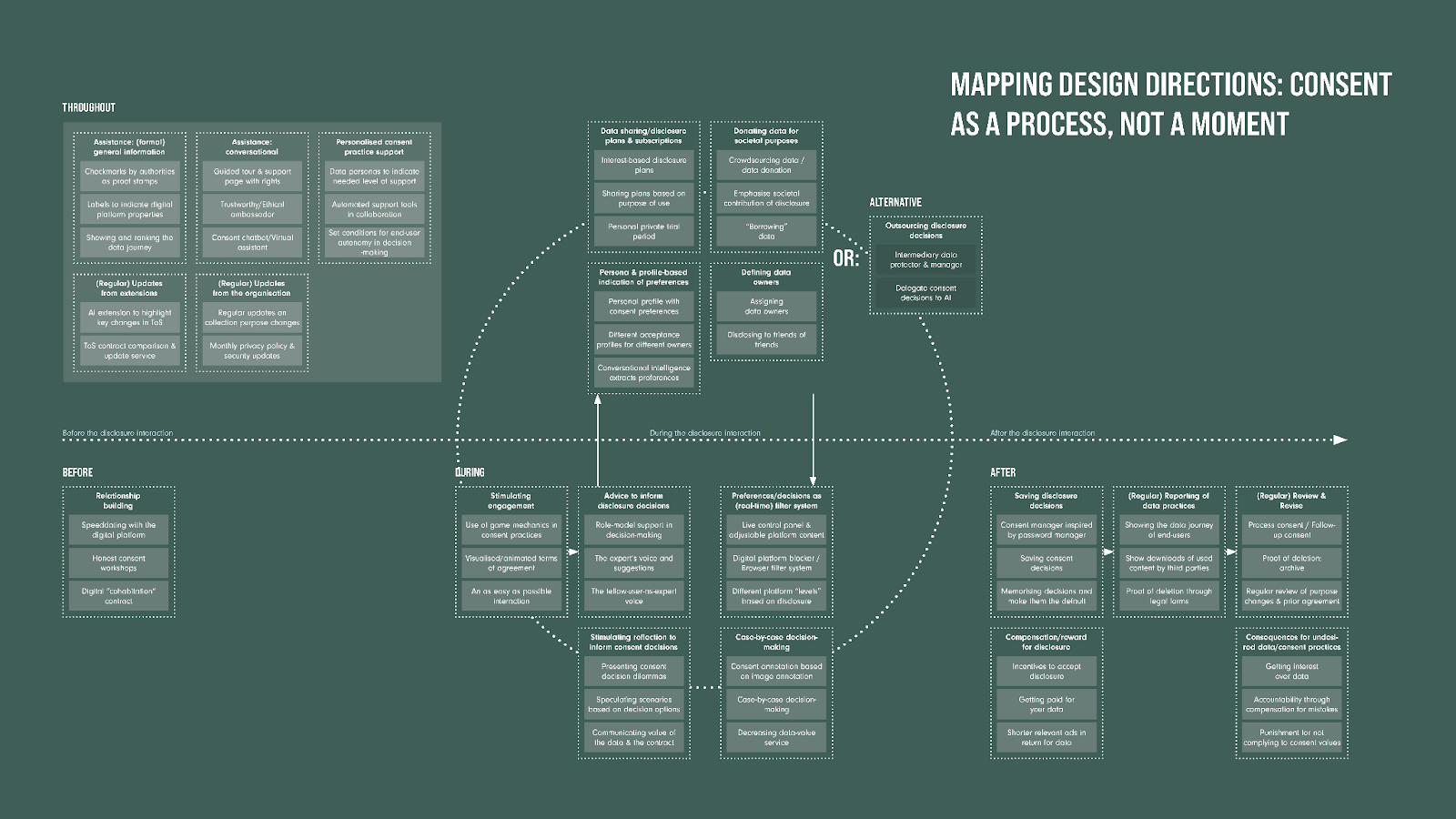

Using a variety of design methods and tools, a total of 21 design directions, comprising 88 different ideas, were created. The directions can be categorized and mapped along a timeline. The key takeaway is that consent can be viewed as a process and therefore has a temporal element. The design directions make it possible to intervene throughout the entire consent process, as well as before, during, and after the disclosure interaction (Figure 2).

Several of these directions and ideas offer potential solutions to the 2019 IBM-Flickr case.

- One proposed idea is to present end-users of digital platforms with different data personas. These are profiles of fictional people, characterized by their prior knowledge of data practices and their consent decisions. End-users select a data persona to indicate how much support, and what type(s) of support, they need throughout their consent journey.

- Another proposed concept is to apply the core functionality of a password manager to digital consent practices. Just as a password manager fills in account details, a consent manager would automatically fill in disclosure preferences for specific digital platforms.

- A final example is to apply the concept of process consent. People often cannot anticipate everything when they first give consent. Instead of relying on that single moment, digital platform organizations should provide waypoints whenever policies and/or disclosure purposes change. At its core, this idea focuses on the ethical dimension: it should keep end-users up to date on their choices and encourage them to reflect on previous disclosure decisions.

The thesis concludes that, within the context of digital platforms, consent practices should be conceptualized as a process rather than as a single moment in time (which is how they are currently approached). This differs sharply from current policies, which create the conditions for quick and easy single-moment interactions, as in the case studied by AI_Commons and beyond.

What’s next?

On 7 March 2022, more design-led concepts involving consent practices and disclosure interactions in the context of digital platforms were presented. Taking the AI_Commons case as a starting point, the session assessed how well “consent as a process”, instead of “consent as a single moment”, scales to similar use cases involving relations between digital platform organizations and end-users. This provided more clarity on the extent to which design-led perspectives can help address policy gaps around privacy and open licensing in the digital age, such as those examined by AI_Commons.

The recording of the session is available here.